On-chip Accelerators in 4th Gen Intel® Xeon® Scalable Processors: Features, Performance, Use Cases, and Future!

Magnolia 14 at the Marriott World Center - Orlando, FL

2:00-5:30pm - June 17th, 2023

Abstract

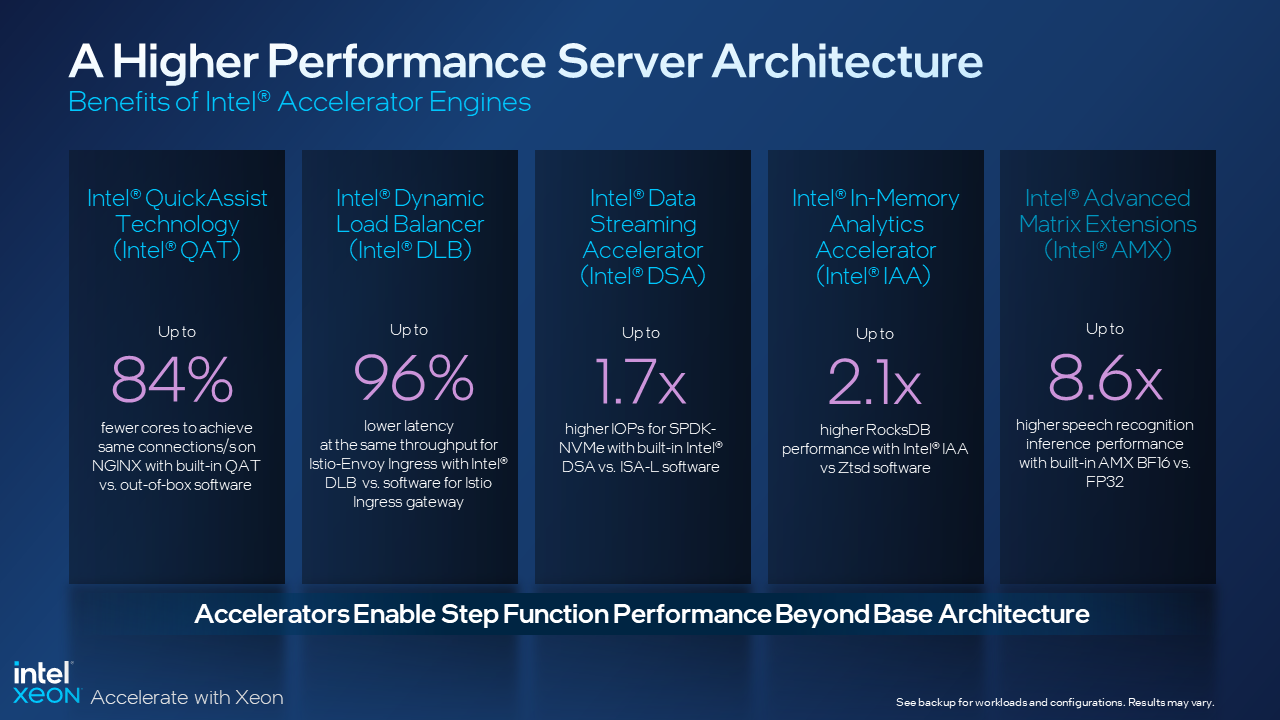

Workloads are evolving — and so is computer architecture. Traditionally, adding more cores to your CPU or choosing a higher-frequency CPU would improve workload performance and efficiency, but these techniques alone can no longer guarantee the same benefits they achieved in the past. Modern workloads place increased demands on compute, network and storage resources. In response, a growing trend exists to deploy power-efficient accelerators to offload specialized functions and reserve compute cores for general-purpose tasks. Offloading specialized tasks to AI, security, HPC, networking, analytics and storage accelerators can result in faster time to results and power savings.

As a result, Intel has integrated the broadest set of built-in accelerators in 4th Gen Intel® Xeon® Scalable processors to boost performance, reduce latency and increase power efficiency. Intel Xeon Scalable processors with Intel® Accelerator Engines can help your business solve today’s most rigorous workload challenges across cloud, networking and enterprise deployments.

This tutorial provides an overview of the latest built-in accelerators -- QuickAssist Technology (QAT), Dynamic Load Balancer (DLB), Data Streaming Accelerator (DSA), and In-memory Analytics Accelerator (IAA), -- and their rich functionalities supported by Intel 4th Gen Xeon Scalable Processors. With several flexible programming models and software libraries, these accelerators have been proven to be beneficial to a wide range of data center infrastructures and applications. In addition, the hands-on labs of this tutorial will take Intel DSA as an example to provide the attendees with the basic knowledge of how to configure, invoke, and make the most out of it with both microbenchmarks and an application.

Details

Intel's 4th generation Xeon processors (Sapphire Rapids) supports four primary accelerators located on-chip. These accelerators support key features to interact efficeintly with cores and memory:

- Intel Shared Virtual Memory (SVM) - SVM enables devices and cores to access shared data in software's virtual address space by IOMMU-based address translation, all while avoiding memory pinning and data copying.

- Intel Accelerator Interfacing Architecture (AiA) - AiA consists of a set of x86 instructions that efficiently dispatch, signal, and synchronize across CPU cores and accelerators from user space.

- Intel Scalable IO Virtualization (S-IOV) - S-IOV brings hardware acceleration for VM/containers and device communication, allowing for scalable sharing and direct access to these accelerators across thousands of VM/containers.

- Symmetric and Asymmetric Cryptography

- TLS1.3 elliptical curves Montgomery encryption (curve 25519 and curve 448)

- Data Compression with CnV for Deflate and LZ4/LZ4s algorithms and CnVnR for Deflate and LZ4s

- Lock-free multi-producer/multi-consumer operation

- Supports unordered, ordered, and atomic traffic types

- Multiple priorities for varying traffic types

- Various distribution schemes

- Move: Memory Copy, CRC32 Generation, Data Integrity Field (DIF) Operations, Dualcast

- Fill: Memory Fill

- Compare: Memory Compare, Delta Record Create, Delta Record Merge, Pattern Detect

- Flush: Cache Flush

Supported Features:

- Batch Processing: Offload a Batch descriptor that points to an array of work descriptors, where Intel DSA fetches the work descriptors from the specified virtual memory address and processes them.

- Cache Control: Specify whether output data is allocated in the cache or is sent to memory without cache allocation.

- Dedicated and Shared Work Queues: Shared work queues can be shared by multiple software components, while dedicated work queues are assigned to asingle software component at a time.

- Partial Descriptor Completion: SVM allows for software-controlled partial descriptor completion when page faults are encountered.

- Traffic Classes: User can both adjust accelerator resources and assign groups with different traffic classes to better match the memory device bandwidth characteristics.

- Compress: Memory Compress, Memory Decompress

- Encrypt: Memory Encrypt, Memory Decrypt

- Filter: Scan, Extract, Select, Expand

- CRC: CRC64

- Memory: Translation Fetch

Supported Features:

- Cache Control: Specify whether output data is allocated in the cache or is sent to memory without cache allocation.

- Compression Early Abort: An application may abord data compression early if the compression job looks likely to not achieve the desired level of compression.

- Dedicated and Shared Work Queues: Shared work queues can be shared by multiple software components, while dedicated work queues are assigned to asingle software component at a time.

- Traffic Classes: User can assign groups with different traffic classes to better match the memory device bandwidth characteristics.

Publications

A Quantitative Analysis and Guidelines of Data Streaming Accelerator in Modern Intel Xeon Scalable Processors

Reese Kuper, Ipoom Jeong, Yifan Yuan, Jiayu Hu, Ren Wang, Narayan Ranganathan, Nam Sung Kim

[PAPER] Open-access ePrint Archive (arXiv). April, 2023

Target Audience

The primary audience for this tutorial are those who work with, or conduct research on, large-scale systems like datacenters or cloud systems. Specifically, those who develop memory-intensive applications intended to be used in aforementioned environments would find the tutorial immensely useful and may find insiration through what these on-chip accelerators offer.

Outline

A link to the slide deck is available here.

Overview of On-Chip Accelerators

- Benefits, workloads, usages, and potential

- Intel's position and effort

Current Xeon Scalable On-Chip Accelerators

- Intel QAT

- Intel DLB

- Intel DSA

- Intel IAA

Future Direction

Intel DSA Live Demo

- Intel DSA discovery and configuration via accel-config utility

- Guide for a simple memory copy offload

- Advanced usage through batching and asynchronous use

- Example results from dsa-perf-micros microbenchmark

- Application demonstration

Organizers

Yifan Yuan (Intel) - He is a research scientist at Intel Labs. His research interest is computer architecture and systems, with a focus on emerging networking hardware and system software for modern data center. He has published multiple papers in top-tier architecture and systems conferences, as well as four US patents. Yifan Yuan received his PhD in computer engineering from University of Illinois, Urbana-Champaign.

Jiayu Hu (Intel) - She is a Software Network Engineer for Intel with six years of experience working on Data Plane Development Kit and open source communities. Jiayu specializes in optimizing Networked Systems via Intel architecture technologies

Ren Wang (Intel) - She is a staff research scientist at Intel Labs. Her research interests include improving performance and reducing power for processors, platforms and distributed systems, via both software and hardware architecture optimizations. Ren received her Ph.D degree in Computer Science at UCLA. Ren has more than 100 US and international patents, and published over 50 technical papers and book chapters.

Narayan Ranganathan (Intel) - He is a Principal Engineer in the Systems, Software and Architecture Lab in Intel Labs. He is responsible for architecture definition and software prototyping of current and upcoming platform features including accelerator technologies like the Data Streaming Accelerator (DSA). Narayan has been with Intel for 24+ years in a variety of roles including architecture, software and validation. He holds a Masters in Computer Engineering from Clemson University, USA.

Reese Kuper (UIUC) - He is a second year Ph.D. student at University of Illinois, Urbana-Champaign. He has been working at Intel as a part-time employee. His current research interests include energy-efficient CPU/GPU microarchitectures and memory system design.

Ipoom Jeong (UIUC) - He is a Postdoctoral Research Associate at the University of Illinois Urbana-Champaign and a member of IEEE. He received his Ph.D. degree in electrical and electronics engineering from Yonsei University, Seoul, South Korea, in 2020. His research experience includes a Hardware Engineer in memory business at Samsung Electronics and a Research Professor at the School of Electrical and Electronic Engineering, Yonsei University. His current research interests include high-performance computer system design, energy-efficient CPU/GPU microarchitectures, and memory/storage system design.

Nam Sung Kim (UIUC) - He is the W.J. ‘Jerry’ Sanders III – Advanced Micro Devices, Inc. Endowed Chair Professor at the University of Illinois, Urbana-Champaign and a fellow of both ACM and IEEE. His interdisciplinary research incorporates device, circuit, architecture, and software for power-efficient computing. He is a recipient of many awards and honors including MICRO Best Paper Award, ACM/IEEE Most Influential ISCA Paper Award, and MICRO Test of Time Award. He is a hall of fame member of all three major computer architecture conferences, HPCA, MICRO, and ISCA.

Links

Introduction and Details: Intel QAT, Intel DLB, Intel DSA, Intel IAA

Specifications: Intel QAT, Intel DLB, Intel DSA, Intel IAA

User Guides: Intel QAT, Intel DLB, Intel DSA, Intel IAA

Drivers: Intel QAT, Intel DLB, Intel DSA, Intel IAA

Libraries and Utilities: QATZip, qatlib, QAT OpenSSL Acceleration, accel-config, DSA Transparent Offload (DTO)

Microbenchmarks: dsa-perf-micros

Example Use Cases:

- Intel QAT - Nginx HTTPs Tuning, Envoy Transport Layer Security, OpenSSL, Hadoop Application Acceleration

- Intel DLB - Network Rate Limiting, Elephant Flow, Scaling IPsec Workloads

- Intel DSA - DPDK Packet Fowarding, FD.io VPP Host Stack, Real-Time Video Transport

- Intel IAA - Tuning ClickHouse, Tuning RocksDB